A Probabilistic, Machine Learning Approach to Data Unification

Recently, I attended the 4th annual Big Data Toronto conference, which addresses the challenges enterprises face when tackling Big Data and the solutions being offered to solve them. My favorite presentation of the two days was “MDM 2.0: A Smarter, Scalable Approach to Data Curation”, given by Dan Waldner, Director of Customer Data at Scotiabank. In it, Dan discussed how Scotiabank used a probabilistic, machine learning-based approach to accelerate the company’s customer mastering / Know Your Customer (KYC) project.

Below is a summary of the presentation and project results, as well as my main takeaways from the discussion.

The Goal: Real-Time Analytic Insights

As we have seen with many of our customers across multiple industries, technology decisions are no longer just IT projects: they need to tie to an underlying business driver in order to succeed. Accordingly, one of the first points Dan made was about getting executive buy-in for this new approach to data curation. At Scotiabank, the project aimed to remove the enterprise’s obstacles to delivering quality analytics. Without a solid data management foundation in place, there was no guarantee of high-quality analytic insights.

The objective of Scotiabank’s KYC project was to centralize customer data in one place, where it could be stitched together using a number of technologies to produce a Golden Record for each customer. The goal was a real-time channel delivering consistently up-to-date data that could be used across multiple case studies, eliminating the duplicate work previously required to gain analytic insights.

Challenges with Traditional MDM

As Dan highlighted in his presentation, there are a number of existing master data management (MDM) solutions on the market that offer deterministic (rules-based) approaches to solving this problem. The challenge that Scotiabank faced, however, was that it needed to unify 100 large systems with petabytes of data that had evolved over a significant period of time. The company had attempted to stitch these systems together in the past, but the complexity of the environment–the volume and variety of data–had proved too difficult a challenge to overcome.

Scotiabank was not alone in facing these challenges; in fact, Gartner estimates that up to 85% of MDM projects fail. In an environment this large, writing and implementing rules becomes incredibly complex. Every additional source requires hundreds of new rules, and at some point the approach is simply no longer feasible.
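To make the brittleness of rules concrete, here is a minimal sketch of a single deterministic match rule. The record fields, the sample values, and the rule itself are hypothetical illustrations, not anything shown in Dan's presentation.

```python
def normalize(value: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(value.lower().split())


def rule_match(a: dict, b: dict) -> bool:
    """Hypothetical deterministic rule: same normalized name AND same postal code."""
    return (
        normalize(a["name"]) == normalize(b["name"])
        and a["postal_code"] == b["postal_code"]
    )


# Invented records for the same person as seen by three source systems.
crm = {"name": "Jonathan Smith", "postal_code": "M5H 1H1"}
cards = {"name": "jonathan  smith", "postal_code": "M5H 1H1"}
loans = {"name": "Jon Smith", "postal_code": "M5H1H1"}

print(rule_match(crm, cards))  # True: the rule anticipated case and spacing
print(rule_match(crm, loans))  # False: a nickname and a postal code format it did not anticipate
```

Each miss like the second one calls for another hand-written rule (nicknames, postal code formats, and so on), which is how rule counts grow with every new source.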

A Machine Learning Approach to Data Unification

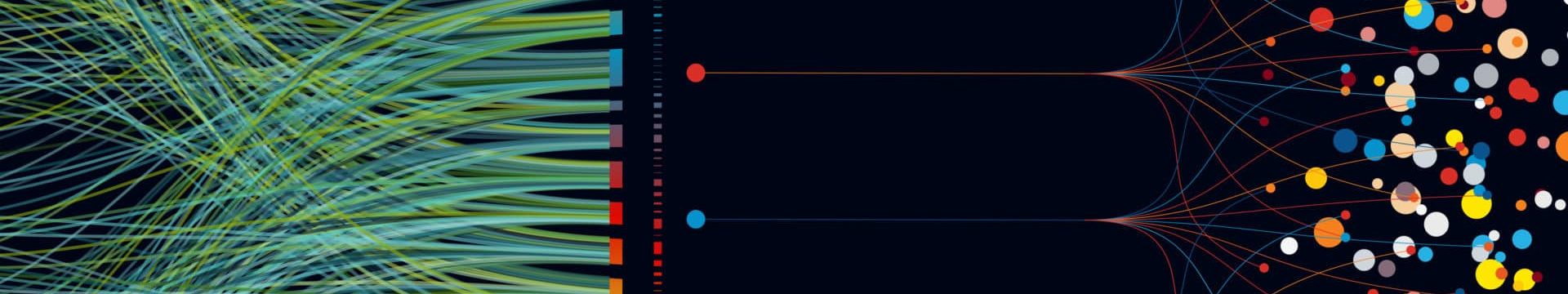

Dan and the team at Scotiabank created a ‘data factory’ that involved three essential steps: 1) aggregate data in a central location, 2) find the data that is needed and organize it into a common model for consumption, and 3) leverage a probabilistic, machine learning-based resolution tool to stitch together the various datasets from different systems.

This machine learning approach to data unification greatly reduced the time and resources needed to add new data sources. Scotiabank completed the initial training of the system in just two days: the model proposed pairs of records it believed referred to the same entity, and subject matter experts confirmed or rejected each match. Through this process, the model learned which attributes indicated a match and which did not, and it improved as it was continuously trained and corrected. In contrast to the rules-based approaches Scotiabank had implemented in the past, which tend to break when too much data is thrown at them, the machine learning model improves with more data.
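The confirm-or-deny training loop described above can be sketched in a few lines. This is only an illustration under stated assumptions, not Scotiabank's or Tamr's implementation: the attributes (`name`, `city`), the sample records, and the choice of a small logistic regression over string similarities are all hypothetical.

```python
import math
from difflib import SequenceMatcher

FIELDS = ("name", "city")  # hypothetical attributes compared for each pair


def features(a: dict, b: dict) -> list:
    """Per-attribute string similarity in [0, 1], plus a constant bias term."""
    sims = [SequenceMatcher(None, a[f].lower(), b[f].lower()).ratio() for f in FIELDS]
    return sims + [1.0]


def match_probability(weights: list, a: dict, b: dict) -> float:
    """Logistic model: probability that records a and b are the same entity."""
    z = sum(w * x for w, x in zip(weights, features(a, b)))
    return 1.0 / (1.0 + math.exp(-z))


def train(labeled_pairs: list, epochs: int = 2000, lr: float = 0.5) -> list:
    """Fit weights by stochastic gradient descent on expert-reviewed pairs."""
    weights = [0.0] * (len(FIELDS) + 1)
    for _ in range(epochs):
        for a, b, label in labeled_pairs:
            error = match_probability(weights, a, b) - label
            weights = [w - lr * error * x for w, x in zip(weights, features(a, b))]
    return weights


# Invented pairs with expert verdicts: 1 = confirmed match, 0 = rejected.
reviewed = [
    ({"name": "Jonathan Smith", "city": "Toronto"}, {"name": "Jon Smith", "city": "Toronto"}, 1),
    ({"name": "Maria Garcia", "city": "Ottawa"}, {"name": "M. Garcia", "city": "Ottawa"}, 1),
    ({"name": "Jonathan Smith", "city": "Toronto"}, {"name": "Priya Patel", "city": "Toronto"}, 0),
    ({"name": "Wei Chen", "city": "Vancouver"}, {"name": "Maria Garcia", "city": "Ottawa"}, 0),
]
weights = train(reviewed)

# Score a pair the experts have not reviewed yet.
candidate = ({"name": "Priya Patel", "city": "Toronto"}, {"name": "Priya Patell", "city": "Toronto"})
print(round(match_probability(weights, *candidate), 3))
```

In this toy data, sharing a city does not by itself make two records a match, so the model has to rely mainly on name similarity. That is a small-scale version of learning which attributes matter. Adding more reviewed pairs refines the weights without anyone writing a new rule.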

The result of this ‘data factory’ approach was that, in just over six months, Scotiabank ingested and profiled 35 large data sources with 3.7 million rows of data to produce 325,000 clusters of customer records. The team is now able to onboard a new system from landing data to mastery in just 5-7 days, and create a new Golden Record in a maximum of 2 days. The team is now looking into expanding this model into other important areas of the business within the next 6 to 12 months.

Dan’s presentation was a great example of how probabilistic, machine learning-based approaches to data unification yield tremendous results in terms of time and cost savings. To learn more about how Tamr Unify can help your company solve Big Data challenges, schedule a demo.
